“Understanding And Using Robots.txt For SEO” is your guide to mastering an often-overlooked aspect of search engine optimization. You’ll discover how this simple text file controls search engine crawlers’ access to your website and helps you shape which pages get crawled. By the end, you’ll know how to create and implement a robots.txt file to improve your site’s visibility and avoid common pitfalls that can hinder your SEO efforts.

Have you ever wondered how search engines like Google know which parts of your website to crawl and which to ignore? Understanding and using Robots.txt for SEO is key to managing how search engines interact with your site. While it might sound a bit technical, it’s one of those secrets that, once uncovered, can significantly boost your site’s SEO game. So, buckle up, because we’re about to dive deep into the world of Robots.txt and how you can leverage it for better SEO results.

What is Robots.txt?

First things first, let’s explain what Robots.txt actually is. Think of it as a set of instructions for search engine bots. This tiny, plain text file resides in the root directory of your website and instructs search engines on which pages they should and shouldn’t crawl. It’s like giving a map to a friend with notes on which places they should absolutely visit and which ones to skip.

Why is Robots.txt Important?

The importance of Robots.txt can’t be overstated. It helps you manage how search engines crawl your site, saving bandwidth and keeping crawlers away from duplicate or low-quality pages that could otherwise dilute how your site appears in search results.

The Basics of Robots.txt Syntax

You don’t need a degree in computer science to understand Robots.txt. The syntax is straightforward, but getting it wrong can lead to disastrous SEO consequences. Let’s break it down, shall we?

User-Agent

The first line in a Robots.txt file generally specifies the user-agent, which is just a way of saying which search engine bot these rules apply to. For example:

User-agent: Googlebot

This line tells crawlers that the rules that follow apply specifically to Google’s crawler.

Disallow

The Disallow directive tells the bot which directories or pages it should avoid. For instance:

Disallow: /private/

This command instructs bots not to crawl any URL that starts with /private/.
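To see how a User-agent line and a Disallow rule work together, here is a minimal sketch using Python’s standard urllib.robotparser module. The domain example.com and the bot name OtherBot are placeholders chosen for illustration, and combining the two directives into one group is an assumption about how they would appear in a real file.

```python
from urllib.robotparser import RobotFileParser

# The two directives from the text, combined into one group.
rules = [
    "User-agent: Googlebot",
    "Disallow: /private/",
]

parser = RobotFileParser()
parser.parse(rules)

# example.com is a placeholder domain used only for illustration.
base = "https://example.com"
print(parser.can_fetch("Googlebot", base + "/private/report.html"))  # False
print(parser.can_fetch("Googlebot", base + "/public/index.html"))    # True
# No group names this (hypothetical) bot, so nothing is blocked for it.
print(parser.can_fetch("OtherBot", base + "/private/report.html"))   # True
```

The rule is a simple prefix match on the URL path, and it only binds the crawler named in the group.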

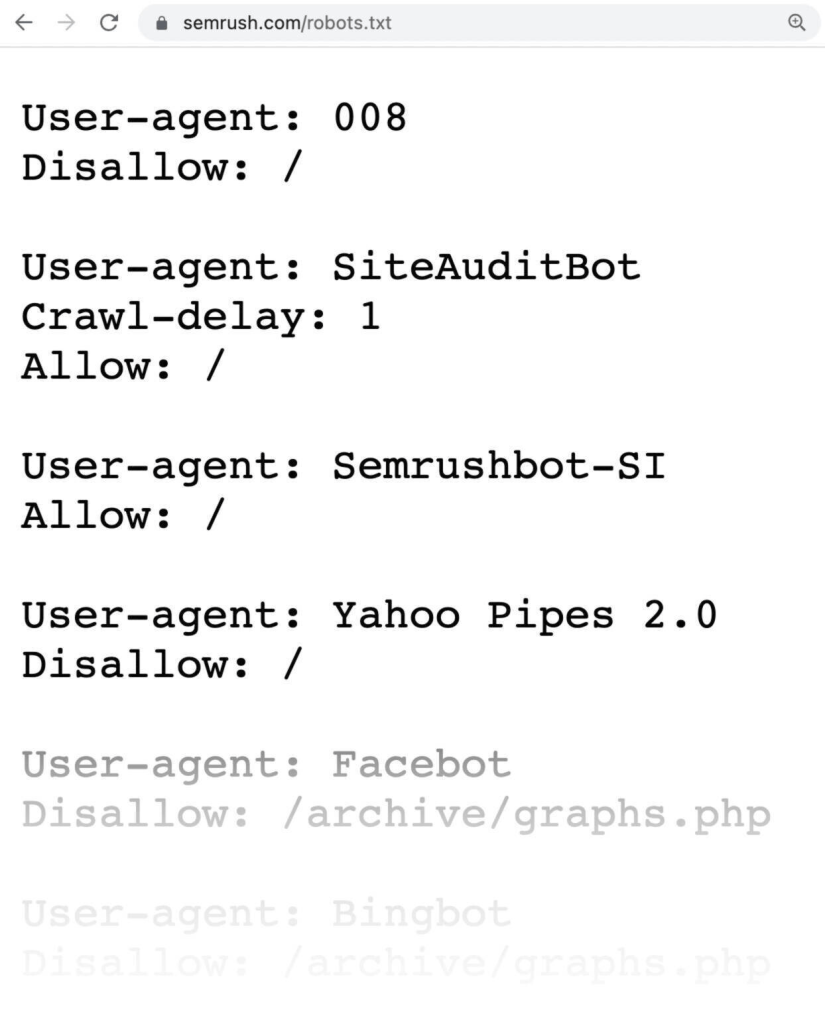

Allow

Conversely, the Allow directive does exactly the opposite; it tells the bot which directories it can crawl. This is especially useful when a subdirectory needs to be accessed within a disallowed parent directory:

Allow: /private/open/

Paired with Disallow: /private/, this rule allows bots to crawl /private/open/ but nothing else under /private/.
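How a crawler resolves an Allow inside a disallowed directory depends on its precedence rule. The sketch below assumes the longest-match behavior described in RFC 9309 and followed by Google (the most specific rule wins, and Allow wins a tie); some parsers apply rules in file order instead. The function name is_allowed is hypothetical.

```python
def is_allowed(rules, path):
    """Return True if `path` may be crawled under `rules`.

    `rules` is a list of (directive, prefix) pairs from one user-agent group.
    The longest matching prefix wins; on a tie, Allow wins.
    """
    best_len = -1
    allowed = True  # no matching rule means the path is crawlable
    for directive, prefix in rules:
        # Skip empty values and rules that do not match this path.
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


rules = [("Disallow", "/private/"), ("Allow", "/private/open/")]
print(is_allowed(rules, "/private/open/page.html"))    # True
print(is_allowed(rules, "/private/secret/page.html"))  # False
print(is_allowed(rules, "/blog/"))                     # True
```

Because the longer prefix /private/open/ is more specific than /private/, the order of the two lines in the file does not matter under this rule.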

Wildcards and Special Characters

Robots.txt also accepts wildcards for more flexible rule-setting. Here are common ones:

- * – Matches any sequence of characters

- $ – Indicates the end of a URL

For example:

Disallow: /*.pdf$

This command tells bots not to crawl any PDF files.
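One way to reason about these wildcards is to translate a pattern into a regular expression. This is a rough sketch, not how any particular search engine implements matching; the helper names pattern_to_regex and matches are made up for this example.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything, then turn each escaped '*' back into '.*'.
    body = re.escape(pattern).replace(r"\*", ".*")
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern, path):
    # Patterns match from the start of the path, like a prefix.
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/*.pdf$", "/files/report.pdf"))      # True
print(matches("/*.pdf$", "/files/report.pdf?v=2"))  # False
print(matches("/private/", "/private/open/"))       # True
```

Note the second result: because of the $ anchor, a PDF URL with a query string appended no longer matches the rule.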

Examples for Common Use-Cases

Here’s a quick table summarizing common scenarios:

| Scenario | Robots.txt Directive |
|---|---|
| Block all bots | User-agent: *<br>Disallow: / |
| Block a specific bot | User-agent: BadBot<br>Disallow: / |
| Allow everything | User-agent: *<br>Allow: / |
| Block specific files | User-agent: *<br>Disallow: /file.html |

Best Practices for Using Robots.txt

Navigating the do’s and don’ts of Robots.txt can be tricky. So, let’s lay down some best practices to make sure you’re getting the most out of this simple yet powerful tool.

Keep it Simple

Complex Robots.txt files can often lead to unintended consequences. Stick to straightforward rules and avoid overcomplicating the directives.

Be Careful with Disallow

While it can be tempting to block everything you think unnecessary, doing so can prevent search engines from indexing useful content. Evaluate carefully before you disallow any directories or pages.

Use Comments

To keep things organized and understandable, especially if you have multiple directives, use comments:

# Block access to the entire site
User-agent: *
Disallow: /

Test Your Robots.txt

Before you deploy your Robots.txt file, check it with a tool such as the robots.txt report in Google Search Console (which replaced the older Robots.txt Tester) to make sure you aren’t blocking any important pages inadvertently.
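Alongside such tools, a quick local check can catch obvious mistakes before a file goes live. The sketch below uses Python’s standard urllib.robotparser; the function name blocked_urls, the draft rules, and the example.com URLs are all assumptions made for illustration. The standard-library parser does not understand the * and $ wildcards, so this only suits files with plain prefix rules.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs from `urls` that the draft rules would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# A hypothetical draft file and a list of pages that must stay crawlable.
draft = """\
User-agent: *
Disallow: /private/
"""
must_crawl = [
    "https://example.com/",
    "https://example.com/blog/first-post",
    "https://example.com/private/pricing",
]

print(blocked_urls(draft, must_crawl))
# ['https://example.com/private/pricing']
```

An empty list means none of the pages you care about are blocked by the draft.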

Noindex vs. Disallow

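It’s crucial to understand the difference between Disallow and noindex. Disallow prevents pages from being crawled, but a blocked URL can still show up in search results if other pages link to it. noindex keeps a page out of search results, and it is set on the page itself with a robots meta tag (or an X-Robots-Tag HTTP header), not in Robots.txt; Google stopped honoring Noindex lines in Robots.txt in 2019. Don’t combine the two on the same URL: a crawler that is disallowed from fetching a page never sees its noindex tag. To keep a page out of the index, leave it crawlable and add this to its HTML head:

<meta name="robots" content="noindex">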

Advanced Robots.txt Techniques

If you’re feeling confident, let’s delve into some advanced techniques that can give you even more control over how search engines interact with your site.

Crawl-Delay

The Crawl-delay directive can help manage the crawl rate. This is particularly useful for large sites that may suffer performance issues from too frequent crawling. Support varies by crawler: Googlebot ignores it, while some others, such as Bingbot, honor it:

User-agent: *
Crawl-delay: 10

This directive asks bots that support it to wait 10 seconds between requests.
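If you write your own crawler, you can read this value and respect it. A minimal sketch with Python’s standard urllib.robotparser follows; ExampleBot is a made-up crawler name, and a reasonably recent Python 3 is assumed, since older releases handled the catch-all group differently.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Crawl-delay: 10",
])

# ExampleBot is a hypothetical crawler name; it falls under the "*" group.
delay = parser.crawl_delay("ExampleBot")
print(delay)  # 10
```

A polite crawler would then pause for that many seconds, for example with time.sleep(delay), between consecutive requests to the same site.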

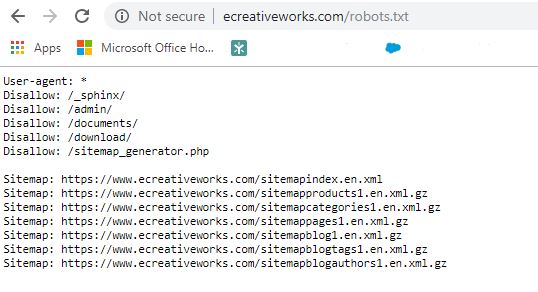

Sitemap Reference

Including a sitemap in your Robots.txt can guide search engines to the most crucial parts of your site:

Sitemap: http://www.yoursite.com/sitemap.xml
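The Sitemap line stands apart from any user-agent group and should carry a full URL. As a small illustration, Python’s standard urllib.robotparser (version 3.8 or later) can list the sitemaps a file declares; the surrounding rules here are assumed for the example.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Allow: /",
    "Sitemap: http://www.yoursite.com/sitemap.xml",
])

sitemaps = parser.site_maps()
print(sitemaps)  # ['http://www.yoursite.com/sitemap.xml']

# A sitemap reference should be an absolute URL with a scheme and host.
for url in sitemaps:
    parts = urlparse(url)
    print(bool(parts.scheme and parts.netloc))  # True
```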

Blocking Query Parameters

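Often, query parameters can create duplicate content issues. You can disallow any URL that contains a query string with a wildcard rule (a plain Disallow: /? would only match URLs whose query string starts directly at the root):

User-agent: *
Disallow: /*?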
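To see which URLs a query-string rule actually covers, here is a rough sketch that compares the patterns /? and /*?. The helper rule_matches is hypothetical and handles only the * wildcard, which is enough for this comparison; the sample paths are invented.

```python
import re


def rule_matches(pattern, path):
    """Check a path (including its query string) against a pattern with '*' wildcards."""
    # Escape each literal piece and join the pieces with '.*' for every '*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.match(regex, path) is not None


# "/?" is a plain prefix: it only covers query strings on the root URL.
print(rule_matches("/?", "/?page=2"))           # True
print(rule_matches("/?", "/shoes?color=red"))   # False

# "/*?" covers a query string on any path.
print(rule_matches("/*?", "/shoes?color=red"))  # True
print(rule_matches("/*?", "/shoes"))            # False
```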

Blocking Specific Bots

If you’re dealing with bad bots or crawlers that you don’t want on your site, you can block them using their user-agent name:

User-agent: BadBot
Disallow: /
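A file can hold one group per bot plus a catch-all group. The sketch below, using Python’s standard urllib.robotparser, shows how a named group and the * group apply to different crawlers; the second group and the example.com URLs are assumptions added for illustration. Keep in mind that Robots.txt is advisory, so a badly behaved bot may simply ignore it.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: BadBot",
    "Disallow: /",
    "",
    "User-agent: *",
    "Disallow: /private/",
])

home = "https://example.com/"  # placeholder domain
print(parser.can_fetch("BadBot", home))                                # False
print(parser.can_fetch("Googlebot", home))                             # True
print(parser.can_fetch("Googlebot", "https://example.com/private/x"))  # False
```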

Common Mistakes to Avoid

Mistakes in Robots.txt files can lead to serious SEO problems. Here are some pitfalls to watch out for.

Blocking CSS and JS Files

At one point, it was common to block CSS and JS files. However, search engines now use these for rendering pages. Avoid disallowing them unless you have a very good reason.
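A simple lint can flag rules that look like they block stylesheets or scripts. This is only a heuristic sketch: the function name asset_blocking_rules, the list of hints, and the sample file are all invented for the example, and a hint such as .js will also match .json.

```python
def asset_blocking_rules(robots_txt):
    """Return Disallow values that look like they target CSS or JS files."""
    hints = (".css", ".js", "/css/", "/js/")
    suspicious = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        field, _, value = line.partition(":")
        if field.strip().lower() != "disallow":
            continue
        value = value.strip()
        if any(hint in value.lower() for hint in hints):
            suspicious.append(value)
    return suspicious


# A hypothetical file with two rules worth a second look.
sample = """\
User-agent: *
Disallow: /*.css$
Disallow: /wp-admin/
Disallow: /static/js/  # legacy rule
"""
print(asset_blocking_rules(sample))  # ['/*.css$', '/static/js/']
```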

Poorly Testing Robots.txt

Always test your Robots.txt file. Google and other search engines provide tools specifically designed for this. Poorly tested files can easily block critical parts of your site.

Ignoring Updates

Search engines periodically update their guidelines. Make sure to stay updated and modify your Robots.txt file accordingly.

Real-World Examples

Seeing real-world Robots.txt files can sometimes be the best way to understand how to use them effectively.

Example from a Blog

If you run a blog, you might want to keep your admin pages and certain archives out of search engine results:

User-agent: *
Disallow: /wp-admin/
Disallow: /tag/
Disallow: /author/
Allow: /wp-admin/admin-ajax.php

Example from an E-commerce Site

For an e-commerce site, you might want to keep crawlers out of cart, checkout, and internal search result pages:

User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /search/

How to Create and Manage Robots.txt

Creating a Robots.txt file is straightforward. Here’s a step-by-step on how to do it.

Step-by-Step Guide

- Open a Text Editor: Use any plain text editor (Notepad, TextEdit, etc.)

- Write Your Directives: Add your user-agent and disallow rules as needed.

- Save as robots.txt: Save the file as robots.txt, in all lowercase, since crawlers request that exact filename.

- Upload to Your Root Directory: Use FTP or your web host’s file manager to upload the file to the root directory, so that it is reachable at www.yoursite.com/robots.txt.
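If you would rather script the steps above than type the file by hand, a short sketch like the following can generate it. The function build_robots_txt and its parameters are hypothetical, and the file is written to a temporary folder here; in practice the target would be your site’s root directory.

```python
from pathlib import Path
import tempfile


def build_robots_txt(disallow, sitemap=None, user_agent="*"):
    """Assemble the file contents from a list of paths to disallow."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


content = build_robots_txt(
    ["/private/"],
    sitemap="http://www.yoursite.com/sitemap.xml",
)

# The filename must be lowercase robots.txt; the folder is a stand-in for the web root.
target = Path(tempfile.mkdtemp()) / "robots.txt"
target.write_text(content, encoding="utf-8")
print(content)
```

Uploading the resulting file still happens through FTP or your host’s file manager, as described in the last step.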

Regular Maintenance

Keep an eye on your Robots.txt file and revisit it regularly to ensure it still meets your needs. Changes in site structure or SEO strategy should prompt a review of this critical file.

The Role of Robots.txt in Overall SEO Strategy

You might be wondering, how does this tiny file fit into the grand scheme of your SEO strategy? Well, it plays a more significant role than you might think.

Enhances Crawl Efficiency

By guiding search engines on where they should focus, you’re ensuring that their crawl budget is wisely spent. This means that more of your valuable content gets indexed.

Manages Duplicate Content

Duplicate content is an SEO nightmare. Use Robots.txt to prevent search engines from crawling duplicate or low-quality pages, thus ensuring a cleaner, more effective SEO performance.

Improves Server Performance

Less unnecessary crawling means improved server performance. Your website will run smoother, providing a better user experience.

Enhances Site Architecture Understanding

By pointing crawlers to your sitemap and steering them away from irrelevant sections, Robots.txt helps search engines focus on the parts of your site architecture that matter, which supports effective indexing and ranking.

Conclusion

Understanding and using Robots.txt for SEO may sound like daunting technical mumbo jumbo, but it’s essentially an art form that, when mastered, can significantly elevate your SEO strategy. Whether it’s managing crawl budgets, preventing duplicate content, or simply keeping crawlers out of certain parts of your site, Robots.txt is your go-to tool. Just remember that the file itself is publicly readable, so it is not a way to hide sensitive content.

So, go ahead, open your text editor, and start crafting that perfect Robots.txt file that will let search engines know that your site means business. Don’t forget to regularly review and test it to adapt to any changes in your site or search engine guidelines. Your future, SEO-optimized self will thank you!